
Pix2Pix Hands-On

- Paper: Image-to-Image Translation with Conditional Adversarial Networks (CVPR 2017)

- In this exercise we train Pix2Pix, a representative model for image-to-image domain translation.

- Training dataset: Facades (3 × 256 × 256)

Importing the Required Libraries

- Import PyTorch and the other libraries used in this exercise.

```python
import os
import glob

import numpy as np
import matplotlib.pyplot as plt
from PIL import Image

import torch
import torch.nn as nn
from torch.utils.data import Dataset
from torch.utils.data import DataLoader
import torchvision.transforms as transforms
from torchvision.utils import save_image
```

Loading the Training Dataset

- Download and extract the Facades dataset for training.

```python
%%capture
!wget http://efrosgans.eecs.berkeley.edu/pix2pix/datasets/facades.tar.gz -O facades.tar.gz
!tar -zxvf facades.tar.gz -C ./
```

```python
# Each file holds one A/B pair side by side
print("Number of training pairs (A, B):", len(next(os.walk('./facades/train/'))[2]))
print("Number of validation pairs (A, B):", len(next(os.walk('./facades/val/'))[2]))
print("Number of test pairs (A, B):", len(next(os.walk('./facades/test/'))[2]))
```

Number of training pairs (A, B): 400

Number of validation pairs (A, B): 100

Number of test pairs (A, B): 106

- Let's look at a training sample.

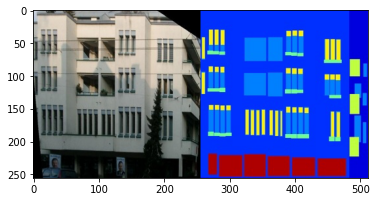

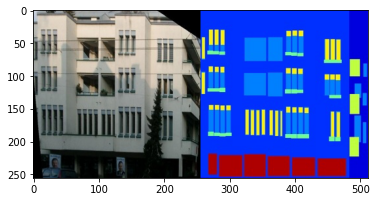

```python
# Display one image pair (left: ground-truth photo, right: conditioning label map)
image = Image.open('./facades/train/1.jpg')
print("Image size:", image.size)
plt.imshow(image)
plt.show()
```

Image size: (512, 256)
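Each file stores the photo (left) and the label map (right) in a single 512 × 256 image. The dataset class therefore cuts each file at `w // 2`, and for augmentation it flips both halves using one shared coin toss. The stdlib-only sketch below mimics that logic on a tiny made-up "image" (a list of pixel rows). The helper names `split_pair` and `paired_flip` are hypothetical and are not part of the original code.

```python
import random

def split_pair(image):
    """Split a side-by-side image (list of rows) into left (A) and right (B) halves."""
    half = len(image[0]) // 2  # integer split point, like img.crop((0, 0, w // 2, h))
    img_A = [row[:half] for row in image]
    img_B = [row[half:] for row in image]
    return img_A, img_B

def paired_flip(img_A, img_B, rng, p=0.5):
    """Flip both halves horizontally with ONE random draw so they stay pixel-aligned."""
    if rng.random() < p:
        img_A = [row[::-1] for row in img_A]
        img_B = [row[::-1] for row in img_B]
    return img_A, img_B

# A 2 x 8 toy "image": each label pixel on the right equals its photo pixel + 100
toy = [
    [0, 1, 2, 3, 100, 101, 102, 103],
    [10, 11, 12, 13, 110, 111, 112, 113],
]

img_A, img_B = split_pair(toy)
rng = random.Random(0)
for _ in range(5):
    a, b = paired_flip(img_A, img_B, rng)
    # Alignment check: flipped or not, pixel (r, c) of B still matches pixel (r, c) of A
    assert all(pb == pa + 100 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))

print(img_A)  # [[0, 1, 2, 3], [10, 11, 12, 13]]
print(img_B)  # [[100, 101, 102, 103], [110, 111, 112, 113]]
```

Flipping A and B independently would break the pixel correspondence that the L1 loss relies on, which is why the dataset uses a single `np.random.random()` draw for both halves.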

- Define a custom Dataset class.

```python
class ImageDataset(Dataset):
    def __init__(self, root, transforms_=None, mode="train"):
        self.transform = transforms_
        self.files = sorted(glob.glob(os.path.join(root, mode) + "/*.jpg"))
        # The dataset is small, so the test split is also used for training
        if mode == "train":
            self.files.extend(sorted(glob.glob(os.path.join(root, "test") + "/*.jpg")))

    def __getitem__(self, index):
        img = Image.open(self.files[index % len(self.files)])
        w, h = img.size
        img_A = img.crop((0, 0, w // 2, h))  # left half of the image
        img_B = img.crop((w // 2, 0, w, h))  # right half of the image

        # Horizontal flip for data augmentation (one decision for both halves keeps them aligned)
        if np.random.random() < 0.5:
            img_A = Image.fromarray(np.array(img_A)[:, ::-1, :], "RGB")
            img_B = Image.fromarray(np.array(img_B)[:, ::-1, :], "RGB")

        if self.transform is not None:
            img_A = self.transform(img_A)
            img_B = self.transform(img_B)

        return {"A": img_A, "B": img_B}

    def __len__(self):
        return len(self.files)
```

```python
transforms_ = transforms.Compose([
    transforms.Resize((256, 256), transforms.InterpolationMode.BICUBIC),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

train_dataset = ImageDataset("facades", transforms_=transforms_)
val_dataset = ImageDataset("facades", transforms_=transforms_, mode="val")  # validation split, used for sampling

# The Colab runtime suggests at most 2 DataLoader workers
train_dataloader = DataLoader(train_dataset, batch_size=10, shuffle=True, num_workers=2)
val_dataloader = DataLoader(val_dataset, batch_size=10, shuffle=True, num_workers=2)
```

Defining the Generator and Discriminator Models
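Every spatial size in the shape annotations of the model code follows from standard convolution arithmetic. For `Conv2d`, out = floor((in + 2p - k) / s) + 1. For `ConvTranspose2d` with dilation 1 and no output padding, out = (in - 1)·s - 2p + k. With k = 4, s = 2, p = 1, the first formula halves the size and the second doubles it. The stdlib-only sketch below traces the generator and the discriminator with those formulas. It also computes the discriminator's receptive field, i.e. how large an input patch each of its 16 × 16 outputs looks at. The helper functions are illustrative and not part of PyTorch.

```python
def conv_out(size, k, s=1, p=0):
    """Output size of nn.Conv2d along one axis (dilation 1)."""
    return (size + 2 * p - k) // s + 1

def conv_transpose_out(size, k, s=1, p=0):
    """Output size of nn.ConvTranspose2d along one axis (dilation 1, output_padding 0)."""
    return (size - 1) * s - 2 * p + k

# Generator encoder: eight UNetDown blocks (k=4, s=2, p=1)
size = 256
down_sizes = []
for _ in range(8):
    size = conv_out(size, k=4, s=2, p=1)
    down_sizes.append(size)

# Generator decoder: seven UNetUp blocks (k=4, s=2, p=1)
up_sizes = []
for _ in range(7):
    size = conv_transpose_out(size, k=4, s=2, p=1)
    up_sizes.append(size)

# Final block: Upsample(x2), ZeroPad2d((1, 0, 1, 0)) adds 1 pixel per axis, then Conv2d(k=4, p=1)
final_size = conv_out(up_sizes[-1] * 2 + 1, k=4, s=1, p=1)

# Discriminator: four blocks (k=4, s=2, p=1), ZeroPad2d adds 1, then Conv2d(k=4, p=1)
d = 256
for _ in range(4):
    d = conv_out(d, k=4, s=2, p=1)
patch_grid = conv_out(d + 1, k=4, s=1, p=1)

# Receptive field of one discriminator output, walking back from output to input:
# r_prev = (r - 1) * stride + kernel   (padding does not change the field size)
layers = [(4, 2)] * 4 + [(4, 1)]  # (kernel, stride), listed from input to output
r = 1
for k, s in reversed(layers):
    r = (r - 1) * s + k

print("encoder:", down_sizes)              # [128, 64, 32, 16, 8, 4, 2, 1]
print("decoder:", up_sizes)                # [2, 4, 8, 16, 32, 64, 128]
print("generator output:", final_size)     # 256
print("discriminator output:", patch_grid) # 16
print("receptive field:", r)               # 94
```

The 16 × 16 grid explains why the training loop builds its real/fake label tensors with shape `(batch, 1, 16, 16)`. This discriminator has four stride-2 blocks, so each output sees a 94 × 94 input patch. That is somewhat larger than the 70 × 70 PatchGAN the paper uses by default.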

```python
# Down-sampling module of the U-Net architecture
class UNetDown(nn.Module):
    def __init__(self, in_channels, out_channels, normalize=True, dropout=0.0):
        super(UNetDown, self).__init__()
        # Halves the width and height (kernel 4, stride 2, padding 1)
        layers = [nn.Conv2d(in_channels, out_channels, kernel_size=4, stride=2, padding=1, bias=False)]
        if normalize:
            layers.append(nn.InstanceNorm2d(out_channels))
        layers.append(nn.LeakyReLU(0.2))
        if dropout:
            layers.append(nn.Dropout(dropout))
        self.model = nn.Sequential(*layers)

    def forward(self, x):
        return self.model(x)


# Up-sampling module of the U-Net architecture: also receives a skip-connection input
class UNetUp(nn.Module):
    def __init__(self, in_channels, out_channels, dropout=0.0):
        super(UNetUp, self).__init__()
        # Doubles the width and height (kernel 4, stride 2, padding 1)
        layers = [nn.ConvTranspose2d(in_channels, out_channels, kernel_size=4, stride=2, padding=1, bias=False)]
        layers.append(nn.InstanceNorm2d(out_channels))
        layers.append(nn.ReLU(inplace=True))
        if dropout:
            layers.append(nn.Dropout(dropout))
        self.model = nn.Sequential(*layers)

    def forward(self, x, skip_input):
        x = self.model(x)
        x = torch.cat((x, skip_input), 1)  # concatenate along the channel dimension
        return x


# U-Net generator architecture
class GeneratorUNet(nn.Module):
    def __init__(self, in_channels=3, out_channels=3):
        super(GeneratorUNet, self).__init__()

        self.down1 = UNetDown(in_channels, 64, normalize=False)  # output: [64 x 128 x 128]
        self.down2 = UNetDown(64, 128)  # output: [128 x 64 x 64]
        self.down3 = UNetDown(128, 256)  # output: [256 x 32 x 32]
        self.down4 = UNetDown(256, 512, dropout=0.5)  # output: [512 x 16 x 16]
        self.down5 = UNetDown(512, 512, dropout=0.5)  # output: [512 x 8 x 8]
        self.down6 = UNetDown(512, 512, dropout=0.5)  # output: [512 x 4 x 4]
        self.down7 = UNetDown(512, 512, dropout=0.5)  # output: [512 x 2 x 2]
        self.down8 = UNetDown(512, 512, normalize=False, dropout=0.5)  # output: [512 x 1 x 1]

        # Skip connections: (output channels x 2) == input channels of the next block
        self.up1 = UNetUp(512, 512, dropout=0.5)  # output: [1024 x 2 x 2]
        self.up2 = UNetUp(1024, 512, dropout=0.5)  # output: [1024 x 4 x 4]
        self.up3 = UNetUp(1024, 512, dropout=0.5)  # output: [1024 x 8 x 8]
        self.up4 = UNetUp(1024, 512, dropout=0.5)  # output: [1024 x 16 x 16]
        self.up5 = UNetUp(1024, 256)  # output: [512 x 32 x 32]
        self.up6 = UNetUp(512, 128)  # output: [256 x 64 x 64]
        self.up7 = UNetUp(256, 64)  # output: [128 x 128 x 128]

        self.final = nn.Sequential(
            nn.Upsample(scale_factor=2),  # output: [128 x 256 x 256]
            nn.ZeroPad2d((1, 0, 1, 0)),  # output: [128 x 257 x 257] (pad left and top by 1)
            nn.Conv2d(128, out_channels, kernel_size=4, padding=1),  # output: [3 x 256 x 256]
            nn.Tanh(),
        )

    def forward(self, x):
        # Forward pass of the U-Net generator, from encoder to decoder
        d1 = self.down1(x)
        d2 = self.down2(d1)
        d3 = self.down3(d2)
        d4 = self.down4(d3)
        d5 = self.down5(d4)
        d6 = self.down6(d5)
        d7 = self.down7(d6)
        d8 = self.down8(d7)
        u1 = self.up1(d8, d7)
        u2 = self.up2(u1, d6)
        u3 = self.up3(u2, d5)
        u4 = self.up4(u3, d4)
        u5 = self.up5(u4, d3)
        u6 = self.up6(u5, d2)
        u7 = self.up7(u6, d1)
        return self.final(u7)


# PatchGAN discriminator architecture
class Discriminator(nn.Module):
    def __init__(self, in_channels=3):
        super(Discriminator, self).__init__()

        def discriminator_block(in_channels, out_channels, normalization=True):
            # Halves the width and height
            layers = [nn.Conv2d(in_channels, out_channels, kernel_size=4, stride=2, padding=1)]
            if normalization:
                layers.append(nn.InstanceNorm2d(out_channels))
            layers.append(nn.LeakyReLU(0.2, inplace=True))
            return layers

        self.model = nn.Sequential(
            # Two images are input (real/translated image + condition image), so the input channels double
            *discriminator_block(in_channels * 2, 64, normalization=False),  # output: [64 x 128 x 128]
            *discriminator_block(64, 128),  # output: [128 x 64 x 64]
            *discriminator_block(128, 256),  # output: [256 x 32 x 32]
            *discriminator_block(256, 512),  # output: [512 x 16 x 16]
            nn.ZeroPad2d((1, 0, 1, 0)),
            nn.Conv2d(512, 1, kernel_size=4, padding=1, bias=False)  # output: [1 x 16 x 16]
        )

    # img_A: real/translated image, img_B: condition
    def forward(self, img_A, img_B):
        # Concatenate the two images along the channel dimension to build the input
        img_input = torch.cat((img_A, img_B), 1)
        return self.model(img_input)
```

Model Training and Sampling
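The training loop combines an adversarial term with an L1 reconstruction term. The paper writes the objective as a min-max over a cross-entropy cGAN loss plus λ times an L1 loss (first line below). This code swaps the cross-entropy for a least-squares (MSE) adversarial loss on the 16 × 16 patch outputs. In the code's terms, x is the condition (`real_A`, the label map), y is the target photo (`real_B`), G(x) is `fake_B`, and D(·, x) stands for `discriminator(·, real_A)`. Expectations and norms are realized as means over the batch, patches, and pixels, which is the default `reduction="mean"` of `MSELoss` and `L1Loss`. Under that reading, the per-batch losses are:

```latex
\begin{aligned}
G^{*} &= \arg\min_{G}\max_{D}\ \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G) \\
\mathcal{L}_{G} &= \mathbb{E}_{x}\big[(D(G(x), x) - 1)^{2}\big] + \lambda\,\mathbb{E}_{x,y}\big[\lVert y - G(x) \rVert_{1}\big], \qquad \lambda = 100 \\
\mathcal{L}_{D} &= \tfrac{1}{2}\Big(\mathbb{E}_{x,y}\big[(D(y, x) - 1)^{2}\big] + \mathbb{E}_{x}\big[D(G(x), x)^{2}\big]\Big)
\end{aligned}
```

Here λ corresponds to `lambda_pixel`. The constants 1 and 0 correspond to the `real` and `fake` label tensors.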

- Initialize the generator and discriminator for training.

- Set suitable hyperparameters.
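`weights_init_normal` decides what to initialize by searching each module's class name, and `.apply` visits every submodule. The stdlib-only sketch below mirrors that dispatch on the class names that occur in this generator and discriminator. The names are listed by hand here, which is an assumption. The sketch shows that only the convolution layers are re-initialized: `InstanceNorm2d` contains neither "Conv" nor "BatchNorm2d", and by default it has no learnable affine parameters anyway. `init_rule` is a hypothetical helper, not part of the original code.

```python
def init_rule(classname):
    """Mirror the branching of weights_init_normal: which init would this class receive?"""
    if classname.find("Conv") != -1:
        return "normal(0.0, 0.02) on weight"
    elif classname.find("BatchNorm2d") != -1:
        return "normal(1.0, 0.02) on weight, 0 on bias"
    return "left at PyTorch default"

# Module class names visited by generator.apply / discriminator.apply (listed by hand)
names = [
    "GeneratorUNet", "Discriminator", "UNetDown", "UNetUp", "Sequential",
    "Conv2d", "ConvTranspose2d", "InstanceNorm2d", "LeakyReLU", "ReLU",
    "Dropout", "Upsample", "ZeroPad2d", "Tanh",
]
for name in names:
    print(f"{name:16s} -> {init_rule(name)}")
```

The N(0, 0.02) convolution init follows the DCGAN convention. The `BatchNorm2d` branch would only matter if the normalization layers were swapped for batch normalization.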

```python
def weights_init_normal(m):
    classname = m.__class__.__name__
    # Conv2d / ConvTranspose2d weights ~ N(0, 0.02)
    if classname.find("Conv") != -1:
        torch.nn.init.normal_(m.weight.data, 0.0, 0.02)
    # BatchNorm2d weights ~ N(1, 0.02), bias = 0 (never taken here: this model uses InstanceNorm2d)
    elif classname.find("BatchNorm2d") != -1:
        torch.nn.init.normal_(m.weight.data, 1.0, 0.02)
        torch.nn.init.constant_(m.bias.data, 0.0)


# Initialize the generator and discriminator
generator = GeneratorUNet()
discriminator = Discriminator()

generator.cuda()
discriminator.cuda()

# Initialize the weights
generator.apply(weights_init_normal)
discriminator.apply(weights_init_normal)

# Loss functions: least-squares (MSE) adversarial loss and pixel-wise L1 loss
criterion_GAN = torch.nn.MSELoss()
criterion_pixelwise = torch.nn.L1Loss()

criterion_GAN.cuda()
criterion_pixelwise.cuda()

# Learning rate
lr = 0.0002

# Optimizers for the generator and the discriminator
optimizer_G = torch.optim.Adam(generator.parameters(), lr=lr, betas=(0.5, 0.999))
optimizer_D = torch.optim.Adam(discriminator.parameters(), lr=lr, betas=(0.5, 0.999))
```

- While training, the model is sampled periodically so the results can be checked along the way.
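How often does sampling actually happen? The training set holds 400 train + 106 test files = 506 pairs. With `batch_size=10` and the DataLoader's default `drop_last=False`, each epoch has ceil(506 / 10) = 51 batches. The loop saves an image whenever `done = epoch * 51 + i` is a multiple of `sample_interval = 200`. The stdlib-only sketch below replays only that counter, without any training, to list the saved steps.

```python
import math

n_files = 400 + 106   # train split + test split, as ImageDataset(mode="train") loads them
batch_size = 10
n_epochs = 200
sample_interval = 200

batches_per_epoch = math.ceil(n_files / batch_size)  # the last partial batch is kept

saved = []  # (done, epoch, batch index) for every saved sample image
for epoch in range(n_epochs):
    for i in range(batches_per_epoch):
        done = epoch * batches_per_epoch + i
        if done % sample_interval == 0:
            saved.append((done, epoch, i))

print(batches_per_epoch)  # 51
print(len(saved))         # 51 images: 0.png, 200.png, ..., 10000.png
print(saved[-1])          # (10000, 196, 4)
```

So `10000.png`, the last sample written, comes from epoch 196 (0-indexed). Samples are spaced a little under four epochs apart.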

```python
import time

n_epochs = 200  # number of training epochs
sample_interval = 200  # save sample images every this many batches

# Weight of the pixel-wise L1 loss between the translated image and the ground-truth image
lambda_pixel = 100

start_time = time.time()

for epoch in range(n_epochs):
    for i, batch in enumerate(train_dataloader):
        # Model inputs: the label map (B) is the condition, the photo (A) is the target
        real_A = batch["B"].cuda()
        real_B = batch["A"].cuda()

        # Target labels for real and fake images (width and height divided by 16: the 16 x 16 discriminator output)
        real = torch.ones(real_A.size(0), 1, 16, 16, device=real_A.device)  # real: 1
        fake = torch.zeros(real_A.size(0), 1, 16, 16, device=real_A.device)  # fake: 0

        # ---- Train the generator ----
        optimizer_G.zero_grad()

        # Generate images
        fake_B = generator(real_A)

        # Adversarial loss of the generator
        loss_GAN = criterion_GAN(discriminator(fake_B, real_A), real)

        # Pixel-wise L1 loss
        loss_pixel = criterion_pixelwise(fake_B, real_B)

        # Total generator loss
        loss_G = loss_GAN + lambda_pixel * loss_pixel

        # Update the generator
        loss_G.backward()
        optimizer_G.step()

        # ---- Train the discriminator ----
        optimizer_D.zero_grad()

        # Discriminator losses (condition: real_A)
        loss_real = criterion_GAN(discriminator(real_B, real_A), real)
        loss_fake = criterion_GAN(discriminator(fake_B.detach(), real_A), fake)
        loss_D = (loss_real + loss_fake) / 2

        # Update the discriminator
        loss_D.backward()
        optimizer_D.step()

        done = epoch * len(train_dataloader) + i
        if done % sample_interval == 0:
            with torch.no_grad():  # sampling only, no gradients needed
                imgs = next(iter(val_dataloader))  # one batch (10 images) from the validation set
                real_A = imgs["B"].cuda()
                real_B = imgs["A"].cuda()
                fake_B = generator(real_A)
            # real_A: condition, fake_B: translated image, real_B: ground-truth image
            img_sample = torch.cat((real_A, fake_B, real_B), -2)  # stack the images along the height
            save_image(img_sample, f"{done}.png", nrow=5, normalize=True)

    # Print a log line at the end of every epoch
    print(f"[Epoch {epoch}/{n_epochs}] [D loss: {loss_D.item():.6f}] [G pixel loss: {loss_pixel.item():.6f}, adv loss: {loss_GAN.item()}] [Elapsed time: {time.time() - start_time:.2f}s]")
```

[Epoch 0/200] [D loss: 0.560331] [G pixel loss: 0.329641, adv loss: 0.7809144854545593] [Elapsed time: 52.55s]

[Epoch 1/200] [D loss: 0.225210] [G pixel loss: 0.391308, adv loss: 0.8067110776901245] [Elapsed time: 104.06s]

[Epoch 2/200] [D loss: 0.119736] [G pixel loss: 0.342993, adv loss: 0.9893133640289307] [Elapsed time: 155.51s]

[Epoch 3/200] [D loss: 0.060683] [G pixel loss: 0.366208, adv loss: 1.0320591926574707] [Elapsed time: 208.06s]

[Epoch 4/200] [D loss: 0.070895] [G pixel loss: 0.325048, adv loss: 1.0521727800369263] [Elapsed time: 259.46s]

[Epoch 5/200] [D loss: 0.096604] [G pixel loss: 0.349445, adv loss: 1.1281390190124512] [Elapsed time: 310.94s]

[Epoch 6/200] [D loss: 0.069620] [G pixel loss: 0.333463, adv loss: 0.9519507884979248] [Elapsed time: 362.44s]

[Epoch 7/200] [D loss: 0.057559] [G pixel loss: 0.325070, adv loss: 1.1611394882202148] [Elapsed time: 414.73s]

[Epoch 8/200] [D loss: 0.045692] [G pixel loss: 0.322076, adv loss: 0.9827876091003418] [Elapsed time: 466.17s]

[Epoch 9/200] [D loss: 0.151312] [G pixel loss: 0.358122, adv loss: 1.4437382221221924] [Elapsed time: 517.91s]

[Epoch 10/200] [D loss: 0.077470] [G pixel loss: 0.306726, adv loss: 0.6923846006393433] [Elapsed time: 569.27s]

[Epoch 11/200] [D loss: 0.192081] [G pixel loss: 0.296288, adv loss: 1.4209017753601074] [Elapsed time: 621.59s]

[Epoch 12/200] [D loss: 0.063872] [G pixel loss: 0.324469, adv loss: 0.6795710325241089] [Elapsed time: 673.34s]

[Epoch 13/200] [D loss: 0.102404] [G pixel loss: 0.362862, adv loss: 0.4977836310863495] [Elapsed time: 724.73s]

[Epoch 14/200] [D loss: 0.121900] [G pixel loss: 0.375523, adv loss: 0.4150375723838806] [Elapsed time: 776.47s]

[Epoch 15/200] [D loss: 0.113150] [G pixel loss: 0.362901, adv loss: 1.6324083805084229] [Elapsed time: 829.01s]

[Epoch 16/200] [D loss: 0.150540] [G pixel loss: 0.325291, adv loss: 0.402769535779953] [Elapsed time: 880.57s]

[Epoch 17/200] [D loss: 0.083624] [G pixel loss: 0.274319, adv loss: 0.5665050745010376] [Elapsed time: 931.91s]

[Epoch 18/200] [D loss: 0.046647] [G pixel loss: 0.292543, adv loss: 0.917290210723877] [Elapsed time: 983.66s]

[Epoch 19/200] [D loss: 0.105786] [G pixel loss: 0.335462, adv loss: 1.3565210103988647] [Elapsed time: 1036.06s]

[Epoch 20/200] [D loss: 0.059561] [G pixel loss: 0.362326, adv loss: 1.1688989400863647] [Elapsed time: 1087.80s]

[Epoch 21/200] [D loss: 0.034316] [G pixel loss: 0.321345, adv loss: 0.8693038821220398] [Elapsed time: 1139.12s]

[Epoch 22/200] [D loss: 0.043167] [G pixel loss: 0.285502, adv loss: 0.8347434997558594] [Elapsed time: 1190.45s]

[Epoch 23/200] [D loss: 0.098935] [G pixel loss: 0.331274, adv loss: 0.5105571150779724] [Elapsed time: 1242.71s]

[Epoch 24/200] [D loss: 0.035406] [G pixel loss: 0.345680, adv loss: 0.7654179334640503] [Elapsed time: 1294.05s]

[Epoch 25/200] [D loss: 0.057263] [G pixel loss: 0.305916, adv loss: 0.6176649332046509] [Elapsed time: 1345.79s]

[Epoch 26/200] [D loss: 0.041583] [G pixel loss: 0.359302, adv loss: 1.0313990116119385] [Elapsed time: 1397.18s]

[Epoch 27/200] [D loss: 0.099395] [G pixel loss: 0.292495, adv loss: 1.4479920864105225] [Elapsed time: 1449.49s]

[Epoch 28/200] [D loss: 0.065432] [G pixel loss: 0.268309, adv loss: 0.8113459348678589] [Elapsed time: 1500.80s]

[Epoch 29/200] [D loss: 0.037296] [G pixel loss: 0.308611, adv loss: 1.0982780456542969] [Elapsed time: 1552.54s]

[Epoch 30/200] [D loss: 0.064119] [G pixel loss: 0.264285, adv loss: 1.2817949056625366] [Elapsed time: 1603.88s]

[Epoch 31/200] [D loss: 0.040339] [G pixel loss: 0.293402, adv loss: 0.7894832491874695] [Elapsed time: 1656.20s]

[Epoch 32/200] [D loss: 0.037840] [G pixel loss: 0.266078, adv loss: 1.0674517154693604] [Elapsed time: 1707.93s]

[Epoch 33/200] [D loss: 0.029823] [G pixel loss: 0.276577, adv loss: 1.0156080722808838] [Elapsed time: 1759.70s]

[Epoch 34/200] [D loss: 0.039911] [G pixel loss: 0.245282, adv loss: 0.8676483035087585] [Elapsed time: 1811.09s]

[Epoch 35/200] [D loss: 0.068004] [G pixel loss: 0.285075, adv loss: 1.2472418546676636] [Elapsed time: 1863.44s]

[Epoch 36/200] [D loss: 0.035850] [G pixel loss: 0.250332, adv loss: 0.8476670980453491] [Elapsed time: 1914.73s]

[Epoch 37/200] [D loss: 0.052470] [G pixel loss: 0.239629, adv loss: 1.1259958744049072] [Elapsed time: 1966.05s]

[Epoch 38/200] [D loss: 0.046650] [G pixel loss: 0.310080, adv loss: 0.7120026350021362] [Elapsed time: 2017.34s]

[Epoch 39/200] [D loss: 0.043057] [G pixel loss: 0.250561, adv loss: 1.0331612825393677] [Elapsed time: 2069.61s]

[Epoch 40/200] [D loss: 0.075888] [G pixel loss: 0.275628, adv loss: 1.0048232078552246] [Elapsed time: 2120.96s]

[Epoch 41/200] [D loss: 0.019193] [G pixel loss: 0.287058, adv loss: 1.0274113416671753] [Elapsed time: 2172.25s]

[Epoch 42/200] [D loss: 0.056119] [G pixel loss: 0.239393, adv loss: 0.8167617321014404] [Elapsed time: 2223.56s]

[Epoch 43/200] [D loss: 0.015883] [G pixel loss: 0.267198, adv loss: 0.9468421339988708] [Elapsed time: 2275.82s]

[Epoch 44/200] [D loss: 0.018044] [G pixel loss: 0.244496, adv loss: 0.8832151293754578] [Elapsed time: 2327.18s]

[Epoch 45/200] [D loss: 0.235572] [G pixel loss: 0.238352, adv loss: 1.3980501890182495] [Elapsed time: 2378.48s]

[Epoch 46/200] [D loss: 0.063973] [G pixel loss: 0.248627, adv loss: 0.6057321429252625] [Elapsed time: 2429.77s]

[Epoch 47/200] [D loss: 0.039086] [G pixel loss: 0.271331, adv loss: 0.7949753999710083] [Elapsed time: 2481.91s]

[Epoch 48/200] [D loss: 0.024581] [G pixel loss: 0.236724, adv loss: 1.1094224452972412] [Elapsed time: 2533.39s]

[Epoch 49/200] [D loss: 0.030717] [G pixel loss: 0.232298, adv loss: 1.0013574361801147] [Elapsed time: 2584.71s]

[Epoch 50/200] [D loss: 0.037827] [G pixel loss: 0.232936, adv loss: 0.7798885107040405] [Elapsed time: 2637.19s]

[Epoch 51/200] [D loss: 0.197720] [G pixel loss: 0.249178, adv loss: 1.7204818725585938] [Elapsed time: 2688.46s]

[Epoch 52/200] [D loss: 0.045382] [G pixel loss: 0.229518, adv loss: 0.6314181089401245] [Elapsed time: 2739.82s]

[Epoch 53/200] [D loss: 0.031806] [G pixel loss: 0.210802, adv loss: 0.8526821136474609] [Elapsed time: 2791.08s]

[Epoch 54/200] [D loss: 0.025265] [G pixel loss: 0.238826, adv loss: 1.0513064861297607] [Elapsed time: 2843.27s]

[Epoch 55/200] [D loss: 0.068328] [G pixel loss: 0.244290, adv loss: 1.0136446952819824] [Elapsed time: 2894.73s]

[Epoch 56/200] [D loss: 0.070652] [G pixel loss: 0.234325, adv loss: 1.2297039031982422] [Elapsed time: 2946.00s]

[Epoch 57/200] [D loss: 0.011286] [G pixel loss: 0.252570, adv loss: 0.9364079236984253] [Elapsed time: 2997.37s]

[Epoch 58/200] [D loss: 0.059667] [G pixel loss: 0.254764, adv loss: 0.5805401802062988] [Elapsed time: 3049.54s]

[Epoch 59/200] [D loss: 0.015108] [G pixel loss: 0.251916, adv loss: 0.8374917507171631] [Elapsed time: 3100.90s]

[Epoch 60/200] [D loss: 0.022445] [G pixel loss: 0.241719, adv loss: 0.970120906829834] [Elapsed time: 3152.20s]

[Epoch 61/200] [D loss: 0.039313] [G pixel loss: 0.232719, adv loss: 0.8198463916778564] [Elapsed time: 3203.64s]

[Epoch 62/200] [D loss: 0.142251] [G pixel loss: 0.223315, adv loss: 1.3460773229599] [Elapsed time: 3255.82s]

[Epoch 63/200] [D loss: 0.015553] [G pixel loss: 0.222825, adv loss: 0.9202275276184082] [Elapsed time: 3307.14s]

[Epoch 64/200] [D loss: 0.033355] [G pixel loss: 0.226606, adv loss: 0.7465857863426208] [Elapsed time: 3358.52s]

[Epoch 65/200] [D loss: 0.013728] [G pixel loss: 0.239810, adv loss: 0.9405672550201416] [Elapsed time: 3409.86s]

[Epoch 66/200] [D loss: 0.016313] [G pixel loss: 0.181129, adv loss: 1.0011255741119385] [Elapsed time: 3462.21s]

[Epoch 67/200] [D loss: 0.025285] [G pixel loss: 0.206227, adv loss: 0.7360242605209351] [Elapsed time: 3513.73s]

[Epoch 68/200] [D loss: 0.255739] [G pixel loss: 0.220748, adv loss: 1.6242244243621826] [Elapsed time: 3565.03s]

[Epoch 69/200] [D loss: 0.019811] [G pixel loss: 0.198161, adv loss: 1.0887689590454102] [Elapsed time: 3616.33s]

[Epoch 70/200] [D loss: 0.075466] [G pixel loss: 0.223438, adv loss: 0.543390154838562] [Elapsed time: 3668.60s]

[Epoch 71/200] [D loss: 0.013542] [G pixel loss: 0.238059, adv loss: 1.006549596786499] [Elapsed time: 3719.94s]

[Epoch 72/200] [D loss: 0.077583] [G pixel loss: 0.226773, adv loss: 1.3163520097732544] [Elapsed time: 3771.39s]

[Epoch 73/200] [D loss: 0.013092] [G pixel loss: 0.238897, adv loss: 1.190638780593872] [Elapsed time: 3822.70s]

[Epoch 74/200] [D loss: 0.009091] [G pixel loss: 0.234385, adv loss: 0.9991838335990906] [Elapsed time: 3874.98s]

[Epoch 75/200] [D loss: 0.018546] [G pixel loss: 0.202138, adv loss: 1.0036548376083374] [Elapsed time: 3926.32s]

[Epoch 76/200] [D loss: 0.012390] [G pixel loss: 0.273573, adv loss: 1.196258783340454] [Elapsed time: 3977.60s]

[Epoch 77/200] [D loss: 0.053398] [G pixel loss: 0.204982, adv loss: 0.9612450003623962] [Elapsed time: 4028.89s]

[Epoch 78/200] [D loss: 0.023748] [G pixel loss: 0.252105, adv loss: 1.034123182296753] [Elapsed time: 4081.15s]

[Epoch 79/200] [D loss: 0.041500] [G pixel loss: 0.234307, adv loss: 0.7551460266113281] [Elapsed time: 4132.41s]

[Epoch 80/200] [D loss: 0.011924] [G pixel loss: 0.205748, adv loss: 0.8598144054412842] [Elapsed time: 4183.69s]

[Epoch 81/200] [D loss: 0.022264] [G pixel loss: 0.195362, adv loss: 0.7817422151565552] [Elapsed time: 4234.97s]

[Epoch 82/200] [D loss: 0.022248] [G pixel loss: 0.210430, adv loss: 0.9987583160400391] [Elapsed time: 4287.27s]

[Epoch 83/200] [D loss: 0.020435] [G pixel loss: 0.194591, adv loss: 0.8743090629577637] [Elapsed time: 4338.59s]

[Epoch 84/200] [D loss: 0.017068] [G pixel loss: 0.238381, adv loss: 1.213318109512329] [Elapsed time: 4389.87s]

[Epoch 85/200] [D loss: 0.009264] [G pixel loss: 0.235362, adv loss: 1.0141481161117554] [Elapsed time: 4441.21s]

[Epoch 86/200] [D loss: 0.007933] [G pixel loss: 0.197306, adv loss: 1.0975708961486816] [Elapsed time: 4493.47s]

[Epoch 87/200] [D loss: 0.017420] [G pixel loss: 0.186257, adv loss: 1.0237855911254883] [Elapsed time: 4544.76s]

[Epoch 88/200] [D loss: 0.009214] [G pixel loss: 0.176523, adv loss: 0.8813095092773438] [Elapsed time: 4596.08s]

[Epoch 89/200] [D loss: 0.037060] [G pixel loss: 0.208598, adv loss: 1.0207114219665527] [Elapsed time: 4647.37s]

[Epoch 90/200] [D loss: 0.087688] [G pixel loss: 0.218045, adv loss: 0.9575861692428589] [Elapsed time: 4699.67s]

[Epoch 91/200] [D loss: 0.005023] [G pixel loss: 0.201437, adv loss: 0.9658225774765015] [Elapsed time: 4750.97s]

[Epoch 92/200] [D loss: 0.010896] [G pixel loss: 0.228045, adv loss: 0.937142014503479] [Elapsed time: 4802.32s]

[Epoch 93/200] [D loss: 0.007608] [G pixel loss: 0.220617, adv loss: 1.0633344650268555] [Elapsed time: 4853.62s]

[Epoch 94/200] [D loss: 0.006051] [G pixel loss: 0.177682, adv loss: 1.074628233909607] [Elapsed time: 4905.76s]

[Epoch 95/200] [D loss: 0.008426] [G pixel loss: 0.209551, adv loss: 1.0892541408538818] [Elapsed time: 4957.07s]

[Epoch 96/200] [D loss: 0.007335] [G pixel loss: 0.171409, adv loss: 0.9790999889373779] [Elapsed time: 5008.52s]

[Epoch 97/200] [D loss: 0.007518] [G pixel loss: 0.193798, adv loss: 1.0433874130249023] [Elapsed time: 5059.98s]

[Epoch 98/200] [D loss: 0.003709] [G pixel loss: 0.208771, adv loss: 0.9659861326217651] [Elapsed time: 5112.29s]

[Epoch 99/200] [D loss: 0.011339] [G pixel loss: 0.206002, adv loss: 1.0790441036224365] [Elapsed time: 5163.60s]

[Epoch 100/200] [D loss: 0.004710] [G pixel loss: 0.219063, adv loss: 0.9879229068756104] [Elapsed time: 5214.91s]

[Epoch 101/200] [D loss: 0.003712] [G pixel loss: 0.212145, adv loss: 0.9768645167350769] [Elapsed time: 5267.23s]

[Epoch 102/200] [D loss: 0.007433] [G pixel loss: 0.172307, adv loss: 1.031888484954834] [Elapsed time: 5318.52s]

[Epoch 103/200] [D loss: 0.020805] [G pixel loss: 0.191080, adv loss: 1.0496633052825928] [Elapsed time: 5369.82s]

[Epoch 104/200] [D loss: 0.007247] [G pixel loss: 0.206618, adv loss: 0.9623000025749207] [Elapsed time: 5420.75s]

[Epoch 105/200] [D loss: 0.003970] [G pixel loss: 0.219859, adv loss: 1.0321054458618164] [Elapsed time: 5472.85s]

[Epoch 106/200] [D loss: 0.003936] [G pixel loss: 0.173175, adv loss: 0.928221583366394] [Elapsed time: 5523.82s]

[Epoch 107/200] [D loss: 0.003428] [G pixel loss: 0.200371, adv loss: 0.9922566413879395] [Elapsed time: 5574.93s]

[Epoch 108/200] [D loss: 0.001910] [G pixel loss: 0.186697, adv loss: 1.0216944217681885] [Elapsed time: 5625.93s]

[Epoch 109/200] [D loss: 0.031960] [G pixel loss: 0.178305, adv loss: 0.8815109133720398] [Elapsed time: 5678.04s]

[Epoch 110/200] [D loss: 0.008342] [G pixel loss: 0.186416, adv loss: 1.0286873579025269] [Elapsed time: 5729.12s]

[Epoch 111/200] [D loss: 0.002166] [G pixel loss: 0.199634, adv loss: 1.01239013671875] [Elapsed time: 5780.24s]

[Epoch 112/200] [D loss: 0.004280] [G pixel loss: 0.176329, adv loss: 0.9297403693199158] [Elapsed time: 5831.36s]

[Epoch 113/200] [D loss: 0.063017] [G pixel loss: 0.177387, adv loss: 1.0849645137786865] [Elapsed time: 5883.35s]

[Epoch 114/200] [D loss: 0.003249] [G pixel loss: 0.194567, adv loss: 1.00436532497406] [Elapsed time: 5934.61s]

[Epoch 115/200] [D loss: 0.005109] [G pixel loss: 0.189428, adv loss: 0.9345830678939819] [Elapsed time: 5985.77s]

[Epoch 116/200] [D loss: 0.004331] [G pixel loss: 0.179149, adv loss: 0.9682204127311707] [Elapsed time: 6036.99s]

[Epoch 117/200] [D loss: 0.003580] [G pixel loss: 0.188151, adv loss: 1.0148134231567383] [Elapsed time: 6089.29s]

[Epoch 118/200] [D loss: 0.003836] [G pixel loss: 0.189364, adv loss: 1.067288875579834] [Elapsed time: 6140.50s]

[Epoch 119/200] [D loss: 0.005717] [G pixel loss: 0.176801, adv loss: 1.0278544425964355] [Elapsed time: 6191.66s]

[Epoch 120/200] [D loss: 0.244995] [G pixel loss: 0.195286, adv loss: 0.3126675486564636] [Elapsed time: 6242.71s]

[Epoch 121/200] [D loss: 0.014503] [G pixel loss: 0.150147, adv loss: 0.9850532412528992] [Elapsed time: 6294.91s]

[Epoch 122/200] [D loss: 0.012993] [G pixel loss: 0.188707, adv loss: 0.8494478464126587] [Elapsed time: 6346.08s]

[Epoch 123/200] [D loss: 0.003692] [G pixel loss: 0.196611, adv loss: 1.022415041923523] [Elapsed time: 6397.33s]

[Epoch 124/200] [D loss: 0.002938] [G pixel loss: 0.186606, adv loss: 0.9705221652984619] [Elapsed time: 6448.44s]

[Epoch 125/200] [D loss: 0.003459] [G pixel loss: 0.157643, adv loss: 0.998089075088501] [Elapsed time: 6500.63s]

[Epoch 126/200] [D loss: 0.003321] [G pixel loss: 0.180089, adv loss: 0.9894605875015259] [Elapsed time: 6551.80s]

[Epoch 127/200] [D loss: 0.003319] [G pixel loss: 0.173219, adv loss: 1.07439124584198] [Elapsed time: 6602.92s]

[Epoch 128/200] [D loss: 0.004062] [G pixel loss: 0.168607, adv loss: 0.9516044855117798] [Elapsed time: 6654.03s]

[Epoch 129/200] [D loss: 0.001799] [G pixel loss: 0.182086, adv loss: 1.0036576986312866] [Elapsed time: 6706.22s]

[Epoch 130/200] [D loss: 0.003700] [G pixel loss: 0.182998, adv loss: 0.9948679804801941] [Elapsed time: 6757.46s]

[Epoch 131/200] [D loss: 0.002051] [G pixel loss: 0.184224, adv loss: 1.0148701667785645] [Elapsed time: 6808.62s]

[Epoch 132/200] [D loss: 0.002050] [G pixel loss: 0.161753, adv loss: 1.0258421897888184] [Elapsed time: 6859.87s]

[Epoch 133/200] [D loss: 0.004180] [G pixel loss: 0.180295, adv loss: 0.9562324285507202] [Elapsed time: 6912.05s]

[Epoch 134/200] [D loss: 0.007223] [G pixel loss: 0.154781, adv loss: 1.0025012493133545] [Elapsed time: 6963.23s]

[Epoch 135/200] [D loss: 0.002015] [G pixel loss: 0.160908, adv loss: 1.0458091497421265] [Elapsed time: 7014.34s]

[Epoch 136/200] [D loss: 0.002179] [G pixel loss: 0.197046, adv loss: 0.9630111455917358] [Elapsed time: 7065.59s]

[Epoch 137/200] [D loss: 0.007557] [G pixel loss: 0.175208, adv loss: 0.9528471827507019] [Elapsed time: 7117.76s]

[Epoch 138/200] [D loss: 0.001691] [G pixel loss: 0.178104, adv loss: 0.9880306124687195] [Elapsed time: 7169.06s]

[Epoch 139/200] [D loss: 0.002005] [G pixel loss: 0.160446, adv loss: 0.9678410887718201] [Elapsed time: 7220.37s]

[Epoch 140/200] [D loss: 0.002810] [G pixel loss: 0.174819, adv loss: 1.031069040298462] [Elapsed time: 7271.57s]

[Epoch 141/200] [D loss: 0.001502] [G pixel loss: 0.154752, adv loss: 0.9971701502799988] [Elapsed time: 7323.67s]

[Epoch 142/200] [D loss: 0.006515] [G pixel loss: 0.189928, adv loss: 1.035855770111084] [Elapsed time: 7374.91s]

[Epoch 143/200] [D loss: 0.004847] [G pixel loss: 0.165924, adv loss: 0.9961034655570984] [Elapsed time: 7425.99s]

[Epoch 144/200] [D loss: 0.009044] [G pixel loss: 0.162621, adv loss: 0.8941065669059753] [Elapsed time: 7477.21s]

[Epoch 145/200] [D loss: 0.003162] [G pixel loss: 0.171194, adv loss: 0.9725656509399414] [Elapsed time: 7529.38s]

[Epoch 146/200] [D loss: 0.024480] [G pixel loss: 0.185342, adv loss: 1.051523208618164] [Elapsed time: 7580.64s]

[Epoch 147/200] [D loss: 0.002871] [G pixel loss: 0.187870, adv loss: 1.0537431240081787] [Elapsed time: 7631.72s]

[Epoch 148/200] [D loss: 0.002199] [G pixel loss: 0.189337, adv loss: 0.9619858264923096] [Elapsed time: 7682.78s]

[Epoch 149/200] [D loss: 0.001067] [G pixel loss: 0.164013, adv loss: 0.9858932495117188] [Elapsed time: 7735.05s]

[Epoch 150/200] [D loss: 0.001779] [G pixel loss: 0.171176, adv loss: 1.005223035812378] [Elapsed time: 7786.22s]

[Epoch 151/200] [D loss: 0.001912] [G pixel loss: 0.158629, adv loss: 0.9989414215087891] [Elapsed time: 7837.20s]

[Epoch 152/200] [D loss: 0.008751] [G pixel loss: 0.161523, adv loss: 0.9668362140655518] [Elapsed time: 7889.48s]

[Epoch 153/200] [D loss: 0.001939] [G pixel loss: 0.175073, adv loss: 0.9961204528808594] [Elapsed time: 7940.77s]

[Epoch 154/200] [D loss: 0.009195] [G pixel loss: 0.169245, adv loss: 1.1206494569778442] [Elapsed time: 7991.93s]

[Epoch 155/200] [D loss: 0.014832] [G pixel loss: 0.181868, adv loss: 0.820640504360199] [Elapsed time: 8043.18s]

[Epoch 156/200] [D loss: 0.002586] [G pixel loss: 0.155320, adv loss: 1.0349605083465576] [Elapsed time: 8095.54s]

[Epoch 157/200] [D loss: 0.003544] [G pixel loss: 0.200790, adv loss: 1.0289254188537598] [Elapsed time: 8146.79s]

[Epoch 158/200] [D loss: 0.254352] [G pixel loss: 0.159031, adv loss: 0.27421003580093384] [Elapsed time: 8198.09s]

[Epoch 159/200] [D loss: 0.219451] [G pixel loss: 0.169825, adv loss: 0.2683924436569214] [Elapsed time: 8249.27s]

[Epoch 160/200] [D loss: 0.184952] [G pixel loss: 0.168947, adv loss: 0.7836729884147644] [Elapsed time: 8301.47s]

[Epoch 161/200] [D loss: 0.259462] [G pixel loss: 0.177259, adv loss: 0.456488698720932] [Elapsed time: 8352.71s]

[Epoch 162/200] [D loss: 0.191785] [G pixel loss: 0.158424, adv loss: 0.2807987928390503] [Elapsed time: 8403.86s]

[Epoch 163/200] [D loss: 0.155810] [G pixel loss: 0.206074, adv loss: 0.33438992500305176] [Elapsed time: 8454.98s]

[Epoch 164/200] [D loss: 0.076625] [G pixel loss: 0.158268, adv loss: 0.5218112468719482] [Elapsed time: 8507.11s]

[Epoch 165/200] [D loss: 0.118348] [G pixel loss: 0.190158, adv loss: 0.39571043848991394] [Elapsed time: 8558.27s]

[Epoch 166/200] [D loss: 0.076636] [G pixel loss: 0.152891, adv loss: 0.8566246032714844] [Elapsed time: 8609.57s]

[Epoch 167/200] [D loss: 0.091659] [G pixel loss: 0.172221, adv loss: 0.4994926452636719] [Elapsed time: 8660.83s]

[Epoch 168/200] [D loss: 0.127160] [G pixel loss: 0.168381, adv loss: 0.4794643521308899] [Elapsed time: 8713.14s]

[Epoch 169/200] [D loss: 0.059232] [G pixel loss: 0.175637, adv loss: 1.1650193929672241] [Elapsed time: 8764.31s]

[Epoch 170/200] [D loss: 0.063739] [G pixel loss: 0.183563, adv loss: 0.6148388385772705] [Elapsed time: 8815.50s]

[Epoch 171/200] [D loss: 0.023040] [G pixel loss: 0.187005, adv loss: 0.8183310031890869] [Elapsed time: 8866.76s]

[Epoch 172/200] [D loss: 0.030206] [G pixel loss: 0.164602, adv loss: 0.8082995414733887] [Elapsed time: 8918.90s]

[Epoch 173/200] [D loss: 0.049121] [G pixel loss: 0.189175, adv loss: 0.8937274813652039] [Elapsed time: 8970.03s]

[Epoch 174/200] [D loss: 0.038261] [G pixel loss: 0.168446, adv loss: 0.6209759712219238] [Elapsed time: 9021.15s]

[Epoch 175/200] [D loss: 0.035395] [G pixel loss: 0.175294, adv loss: 0.6679251194000244] [Elapsed time: 9072.46s]

[Epoch 176/200] [D loss: 0.033775] [G pixel loss: 0.165349, adv loss: 0.7349603176116943] [Elapsed time: 9124.63s]

[Epoch 177/200] [D loss: 0.020501] [G pixel loss: 0.174264, adv loss: 0.8930066823959351] [Elapsed time: 9175.89s]

[Epoch 178/200] [D loss: 0.089944] [G pixel loss: 0.168758, adv loss: 0.9510349035263062] [Elapsed time: 9227.18s]

[Epoch 179/200] [D loss: 0.022041] [G pixel loss: 0.161430, adv loss: 0.7917999029159546] [Elapsed time: 9278.48s]

[Epoch 180/200] [D loss: 0.028426] [G pixel loss: 0.168608, adv loss: 0.6909393072128296] [Elapsed time: 9330.58s]

[Epoch 181/200] [D loss: 0.018376] [G pixel loss: 0.180802, adv loss: 1.0058307647705078] [Elapsed time: 9381.78s]

[Epoch 182/200] [D loss: 0.020922] [G pixel loss: 0.167025, adv loss: 1.0458407402038574] [Elapsed time: 9432.95s]

[Epoch 183/200] [D loss: 0.041034] [G pixel loss: 0.166084, adv loss: 0.806882381439209] [Elapsed time: 9484.21s]

[Epoch 184/200] [D loss: 0.028059] [G pixel loss: 0.174010, adv loss: 0.8454649448394775] [Elapsed time: 9536.38s]

[Epoch 185/200] [D loss: 0.015850] [G pixel loss: 0.154059, adv loss: 1.0488051176071167] [Elapsed time: 9587.67s]

[Epoch 186/200] [D loss: 0.059642] [G pixel loss: 0.175449, adv loss: 0.9773682355880737] [Elapsed time: 9638.93s]

[Epoch 187/200] [D loss: 0.018942] [G pixel loss: 0.171920, adv loss: 1.0830328464508057] [Elapsed time: 9690.10s]

[Epoch 188/200] [D loss: 0.014164] [G pixel loss: 0.156902, adv loss: 1.0089199542999268] [Elapsed time: 9742.29s]

[Epoch 189/200] [D loss: 0.014628] [G pixel loss: 0.163402, adv loss: 0.7964131236076355] [Elapsed time: 9793.41s]

[Epoch 190/200] [D loss: 0.013994] [G pixel loss: 0.160755, adv loss: 0.9182969927787781] [Elapsed time: 9844.65s]

[Epoch 191/200] [D loss: 0.074617] [G pixel loss: 0.173578, adv loss: 0.5211181640625] [Elapsed time: 9895.92s]

[Epoch 192/200] [D loss: 0.011411] [G pixel loss: 0.171053, adv loss: 1.0480167865753174] [Elapsed time: 9947.94s]

[Epoch 193/200] [D loss: 0.006861] [G pixel loss: 0.169426, adv loss: 1.0266128778457642] [Elapsed time: 9998.81s]

[Epoch 194/200] [D loss: 0.016326] [G pixel loss: 0.139121, adv loss: 0.9775941371917725] [Elapsed time: 10049.83s]

[Epoch 195/200] [D loss: 0.010289] [G pixel loss: 0.164239, adv loss: 0.8970546722412109] [Elapsed time: 10100.88s]

[Epoch 196/200] [D loss: 0.019345] [G pixel loss: 0.176286, adv loss: 0.8734278678894043] [Elapsed time: 10153.18s]

[Epoch 197/200] [D loss: 0.009058] [G pixel loss: 0.161024, adv loss: 0.9567171931266785] [Elapsed time: 10204.25s]

[Epoch 198/200] [D loss: 0.023307] [G pixel loss: 0.153450, adv loss: 1.1096452474594116] [Elapsed time: 10255.27s]

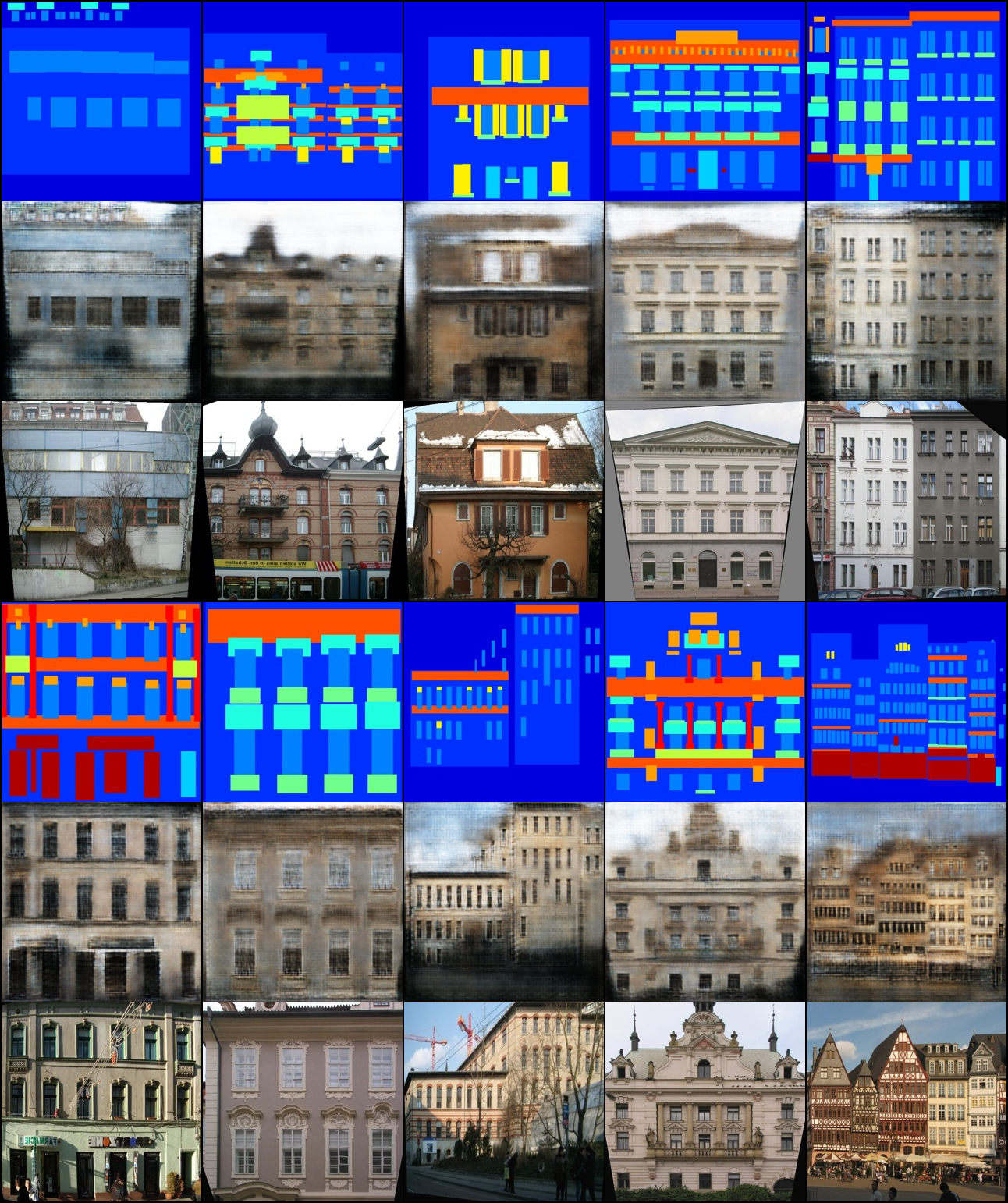

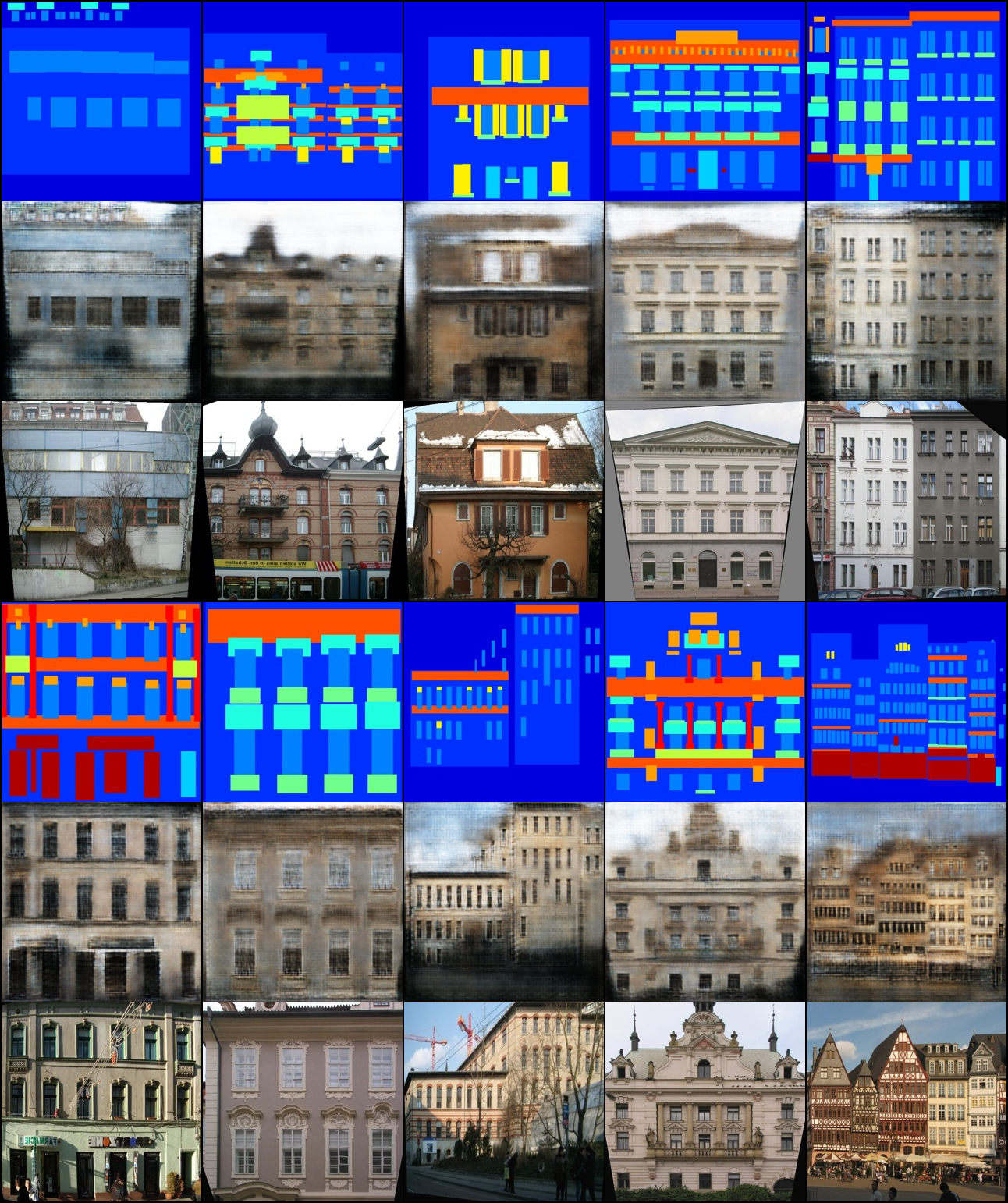

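[Epoch 199/200] [D loss: 0.008485] [G pixel loss: 0.177730, adv loss: 0.9065925478935242] [Elapsed time: 10306.36s]

- Display an example of the generated images.

# Import under an alias so PIL's Image (used by ImageDataset and transforms.Resize) is not shadowed

from IPython.display import Image as IPyImage

# Sample grid saved at iteration 10000; each tile stacks condition / translated / ground truth vertically

IPyImage('10000.png')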
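The display cell above opens `10000.png` because the training loop saves a sample grid only when `done = epoch * len(train_dataloader) + i` is a multiple of `sample_interval`. The sketch below replays that counter without a GPU to show which files end up on disk.

It rests on a few assumptions:
- The training set is the 400 `train` images plus the 106 `test` images that `ImageDataset` combines in `"train"` mode.
- `batch_size=10`, `n_epochs=200` and `sample_interval=200`, as in the training cell.
- The `DataLoader` uses its default `drop_last=False`.

`saved_sample_files` is a hypothetical helper written only for this illustration.

```python
import math


def saved_sample_files(n_train_images, batch_size, n_epochs, sample_interval):
    """List the sample filenames the training loop would write (hypothetical helper).

    Replays `done = epoch * len(train_dataloader) + i` together with the
    `done % sample_interval == 0` check. Assumes DataLoader's default
    drop_last=False, so a partial final batch still counts as a step.
    """
    batches_per_epoch = math.ceil(n_train_images / batch_size)
    total_steps = n_epochs * batches_per_epoch  # done takes values 0 .. total_steps - 1
    return [f"{done}.png" for done in range(0, total_steps, sample_interval)]


# 400 train + 106 test images, as combined by ImageDataset(mode="train")
files = saved_sample_files(n_train_images=400 + 106, batch_size=10,
                           n_epochs=200, sample_interval=200)
print(len(files), files[0], files[-1])  # 51 0.png 10000.png
```

With 51 batches per epoch, `done` runs from 0 to 10199. The last multiple of 200 in that range is 10000, so `10000.png` is the final grid written, at epoch 196, batch index 4.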

Other posts in the 'AI_Bootcamp' category

| Post | Date |
|---|---|
| Week 6 Day23 CycleGAN (0) | 2022.02.14 |
| Week 6 Day22 Pix2Pix (CVPR 2017) (0) | 2022.02.10 |
| Week 6 Day21 GAN: Generative Adversarial Networks (0) | 2022.02.07 |
| Week 4 Day20 Lenet, Alexnet, VCG-16-net, Resnet (0) | 2022.02.06 |
| Week 4 Day19 Deep Learning: ANN, DNN, CNN Comparison (0) | 2022.02.01 |


[Epoch 182/200] [D loss: 0.020922] [G pixel loss: 0.167025, adv loss: 1.0458407402038574] [Elapsed time: 9432.95s]

[Epoch 183/200] [D loss: 0.041034] [G pixel loss: 0.166084, adv loss: 0.806882381439209] [Elapsed time: 9484.21s]

[Epoch 184/200] [D loss: 0.028059] [G pixel loss: 0.174010, adv loss: 0.8454649448394775] [Elapsed time: 9536.38s]

[Epoch 185/200] [D loss: 0.015850] [G pixel loss: 0.154059, adv loss: 1.0488051176071167] [Elapsed time: 9587.67s]

[Epoch 186/200] [D loss: 0.059642] [G pixel loss: 0.175449, adv loss: 0.9773682355880737] [Elapsed time: 9638.93s]

[Epoch 187/200] [D loss: 0.018942] [G pixel loss: 0.171920, adv loss: 1.0830328464508057] [Elapsed time: 9690.10s]

[Epoch 188/200] [D loss: 0.014164] [G pixel loss: 0.156902, adv loss: 1.0089199542999268] [Elapsed time: 9742.29s]

[Epoch 189/200] [D loss: 0.014628] [G pixel loss: 0.163402, adv loss: 0.7964131236076355] [Elapsed time: 9793.41s]

[Epoch 190/200] [D loss: 0.013994] [G pixel loss: 0.160755, adv loss: 0.9182969927787781] [Elapsed time: 9844.65s]

[Epoch 191/200] [D loss: 0.074617] [G pixel loss: 0.173578, adv loss: 0.5211181640625] [Elapsed time: 9895.92s]

[Epoch 192/200] [D loss: 0.011411] [G pixel loss: 0.171053, adv loss: 1.0480167865753174] [Elapsed time: 9947.94s]

[Epoch 193/200] [D loss: 0.006861] [G pixel loss: 0.169426, adv loss: 1.0266128778457642] [Elapsed time: 9998.81s]

[Epoch 194/200] [D loss: 0.016326] [G pixel loss: 0.139121, adv loss: 0.9775941371917725] [Elapsed time: 10049.83s]

[Epoch 195/200] [D loss: 0.010289] [G pixel loss: 0.164239, adv loss: 0.8970546722412109] [Elapsed time: 10100.88s]

[Epoch 196/200] [D loss: 0.019345] [G pixel loss: 0.176286, adv loss: 0.8734278678894043] [Elapsed time: 10153.18s]

[Epoch 197/200] [D loss: 0.009058] [G pixel loss: 0.161024, adv loss: 0.9567171931266785] [Elapsed time: 10204.25s]

[Epoch 198/200] [D loss: 0.023307] [G pixel loss: 0.153450, adv loss: 1.1096452474594116] [Elapsed time: 10255.27s]

[Epoch 199/200] [D loss: 0.008485] [G pixel loss: 0.177730, adv loss: 0.9065925478935242] [Elapsed time: 10306.36s]

- Display an example of the generated images.

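# Import under a different name so that the PIL `Image` imported at the top (used by ImageDataset) is not shadowed

from IPython.display import Image as IPyImage, display

# Show the sample image 10000.png saved during training

display(IPyImage('10000.png'))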
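The training log printed above is easier to analyze once it is parsed into numbers, for example to spot the jump in D loss from about 0.0035 at epoch 157 to about 0.25 at epoch 158, or to estimate how long one epoch takes. The following is a minimal sketch, assuming the log has been captured as plain text in exactly the format shown above; `parse_log` and `seconds_per_epoch` are hypothetical helper names and are not part of the original notebook.

```python
import re
from statistics import mean

# Assumption: each log line has exactly the format printed above, e.g.
# [Epoch 146/200] [D loss: 0.024480] [G pixel loss: 0.185342, adv loss: 1.05...] [Elapsed time: 7580.64s]
NUM = r"([0-9.eE+-]+)"  # a decimal number, also tolerating scientific notation
LOG_PATTERN = re.compile(
    rf"\[Epoch (\d+)/(\d+)\] "
    rf"\[D loss: {NUM}\] "
    rf"\[G pixel loss: {NUM}, adv loss: {NUM}\] "
    rf"\[Elapsed time: {NUM}s\]"
)


def parse_log(text):
    """Return one dict per epoch line found in `text`, in order of appearance."""
    records = []
    for m in LOG_PATTERN.finditer(text):
        epoch, total, d_loss, g_pixel, g_adv, elapsed = m.groups()
        records.append({
            "epoch": int(epoch),
            "total_epochs": int(total),
            "d_loss": float(d_loss),
            "g_pixel_loss": float(g_pixel),
            "g_adv_loss": float(g_adv),
            "elapsed_s": float(elapsed),  # cumulative seconds since training started
        })
    return records


def seconds_per_epoch(records):
    """Average time between consecutive logged epochs (Elapsed time is cumulative)."""
    gaps = [b["elapsed_s"] - a["elapsed_s"] for a, b in zip(records, records[1:])]
    return mean(gaps) if gaps else 0.0


if __name__ == "__main__":
    # Two lines copied from the log above
    sample = (
        "[Epoch 157/200] [D loss: 0.003544] [G pixel loss: 0.200790, adv loss: 1.0289254188537598] [Elapsed time: 8146.79s]\n\n"
        "[Epoch 158/200] [D loss: 0.254352] [G pixel loss: 0.159031, adv loss: 0.27421003580093384] [Elapsed time: 8198.09s]\n"
    )
    recs = parse_log(sample)
    worst = max(recs, key=lambda r: r["d_loss"])
    print("epoch with highest D loss:", worst["epoch"])  # prints 158
    print("seconds per epoch: %.2f" % seconds_per_epoch(recs))  # prints 51.30
```

With the full log saved to a text file, the resulting list of records can be passed to `plt.plot` (for example, epoch against `d_loss` and `g_pixel_loss`) to draw loss curves for the whole run.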
